My great regret is that I always have too little time to write posts, and the emptiness of this blog does not reflect the many great and stimulating scientific events and opportunities I enjoyed throughout 2024. As a last-minute remedy (with a promise to do better next year… hopefully), here is a month-by-month summary of the highlights.

In January, I launched the Voices from Online Labour (VOLI) project, which I coordinate with a grant of about €570,000 from the French National Agency for Research. This four-year initiative brings together expertise from sociology, linguistics, and AI technology across multiple institutions, including four French research centres, a speech technology company, and three international partners.

In February, with the Diplab team, I spent two exciting days at the European Parliament in Brussels, engaging in in-depth discussions with and about platform workers as part of the 4th edition of the Transnational Forum on Alternatives to Uberization. I chaired a panel with data workers and content moderators from Europe and beyond, aiming to raise awareness of the difficult working conditions of those who fuel artificial intelligence and ensure safe participation in social media.

In March, three publications saw the light of day. One is a solo-authored chapter, in French, on ‘Algorithmes, inégalités, et les humains dans la boucle’ (Algorithms, inequalities, and the humans in the loop) in a collective book entitled ‘Ce qui échappe à l’intelligence artificielle’ (What AI cannot do). The other two are journal articles that may seem a little further from my ‘usual’ topics, but they matter because they are experiments in research-informed teaching. One is a study of the 15-minute city concept applied to Paris, carried out with a colleague, S. Berkemer of Ecole Polytechnique, and a team of brilliant ENSAE students. The other analyses the penetration of AI into a specific research field, neuroscience, and shows that, for all its alleged potential, AI created a confined subfield but did not entirely disrupt the discipline. This study, which belongs to a larger project on AI in science, was part of the PhD research of S. Fontaine (who has now obtained his degree!) and is co-authored with his co-supervisors F. Gargiulo and M. Dubois.

In April, I co-published the final report of the study conducted for the European Parliament, ‘Who Trains the Data for European Artificial Intelligence?’. Despite massive offshoring of data tasks to lower-income countries in the Global South, we find that there are still data workers in Europe. They often live in countries where standard labour markets are weaker, like Portugal, Italy and Spain; in more dynamic countries like Germany and France, they are often immigrants. They do data work because they lack sufficiently good alternative opportunities, even though most of them are young and highly educated.

I then attended two highly relevant events. On 30 April–1 May, I was at a Workshop on Driving Adoption of Worker-Centric Data Enrichment Guidelines and Principles, organised by Partnership on AI (PAI) and Fairwork in New York City, which brought together representatives of AI companies, data vendors and platforms, and researchers. The goal was to discuss what employers and intermediaries can do to improve working conditions. On 28 May, I was in Cairo, Egypt, for the very first conference of the Middle East and Africa chapter of INDL (International Network on Digital Labour), the research network I co-founded. It was a fantastic opportunity to open the network to countries that were less represented before, and whose voices we would like to hear more of.

June brought another exciting opportunity: a workshop on ‘The Political Economy of Green-Digital Transition’ at LUT University in Lappeenranta, Finland.

In July, the final version of our article ‘Who bears the burden of a pandemic? COVID-19 and the transfer of risk to digital platform workers’ came out in American Behavioral Scientist.

August is a quieter month (though I greatly enjoyed a session at the Paralympics in Paris!), so I’ll jump to September, which was packed with activities: a trip to Cambridge, UK, for a workshop on disinformation at the Minderoo Centre for Technology and Democracy; a workshop on Invisible Labour at Copenhagen Business School in Denmark; and a one-day conference on gender in the platform economy in Paris. Another publication came out: a journal article, in Spanish, on Argentinean platform data workers.

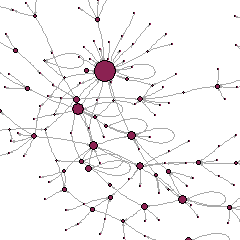

More publications came out in October: a book chapter, in Portuguese, on ‘Fabricar os dados: o trabalho por trás da Inteligência Artificial’ (Manufacturing data: the work behind Artificial Intelligence), and a journal article, in French, on the ethics and methodology of using graph visualizations in fieldwork (an older topic to which I’m still attached, and which takes on renewed importance as research ethics evolve so quickly!).

From the end of October until mid-November, I travelled to Chile for the seventh conference of the International Network on Digital Labour (INDL-7), which I co-organised. It was an immensely rewarding experience, and I took the opportunity to strengthen my ties and collaborations with colleagues there. It was a very intense and super-exciting time: after INDL-7 (28-30 October), I spent a week in Buenos Aires, Argentina, where I co-presented work in progress at the XV Jornadas de Estudios Sociales de la Economía, UNSAM. I then returned to Chile, where I gave a keynote at the XI COES International Conference in Viña del Mar on 8 November, and another at the ENEFA conference in Valdivia on 14 November. I also gave a talk in the ChiSocNet seminar series in Santiago on 11 November.

December marked my return to teaching… and planning for the new year! Also of note, I was interviewed for a Swiss podcast.