On Friday last week, the British Sociological Association (BSA) held an event on “The Challenge of Big Data” at the British Library. It was interesting, stimulating and relevant – I was particularly impressed by the involvement of participants and the very intense live-tweeting, which has never been so lively at a BSA event! And people were particularly friendly and talkative, both on their keyboards and at the coffee tables… so in honour of all this, I am choosing the hashtag of the day, #bigdataBL, as the title here.

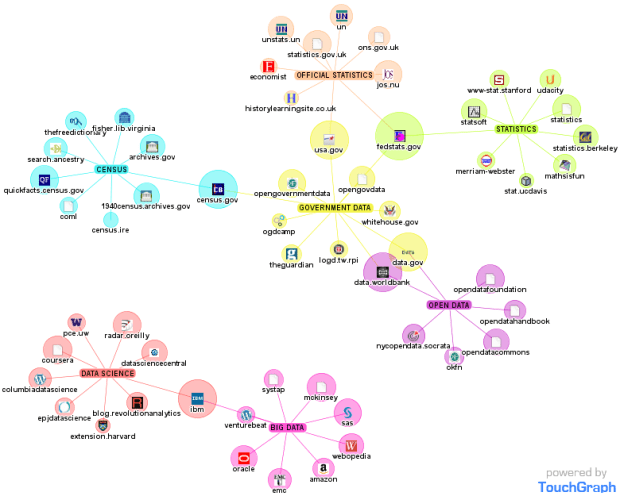

(Visualisation: http://www.digitalcoeliac.com/)

Some highlights:

- The designation of “big data” is from industry, not (social) science, said a speaker at the very beginning. And it is known to be fuzzy. Yet it becomes a relevant object of scientific inquiry in that it is bound to affect society, democracy, the economy and, well, social science.

- Big-data practices change people’s perception of data production and use. Ordinary people are now increasingly aware that a growing range of their actions and activities are being digitally recorded and stored. Data are now a recognized social object.

- Big data needs to be understood in the context of new forms of value production.

- So, social scientists need to take note (and this was the intended motivation of the whole event). The complication is that big data matter for social science in two different ways. First, they are an object of study in themselves: what are their implications for, say, inequalities, democratic participation and the distribution of wealth? Second, they offer new methods that can be exploited to gain insight into a wide range of (traditional and new) social phenomena, such as consumer behaviours (think of Tesco supermarket sales data).

- Put differently, if you want to understand the world as it is now, you need to understand how information is created, used and stored – that’s what the Big Data business is all about, both for social scientists and for industry actors.