Twitter conversations are one way in which participants in an event engage with the programme, comment on and discuss the talks they attend, and prolong question-and-answer sessions. Twitter feeds have become part of the official communication strategy of major events and serve documentation and information purposes, both for attendees and for outsiders. While tweeting is becoming more and more a prerogative of “official” accounts in charge of event communication, it is also a potential tool in the hands of each participant, allowing anyone to join the conversation, at least in principle. Participants may become aware of each other, perhaps using the opportunity of the event to meet face-to-face, start relationships and even collaborations. A Nesta study stressed the potential of social media data for attaining a quantitative understanding of events and their impacts on participants’ networks.

The OuiShare Fest 2016, a major gathering of the collaborative economy community that took place last week in Paris, was one opportunity to see such mechanisms at work. Tweeting was easy, thanks to an official hashtag, #OSFEST16, although related hashtags were also widely used. I mined a total of 12440 tweets over the four days of the event. Do Twitter conversations related to the Fest bring to light the emergence of a community? While it’s too early for any deep analysis, some descriptive results can already be shown.

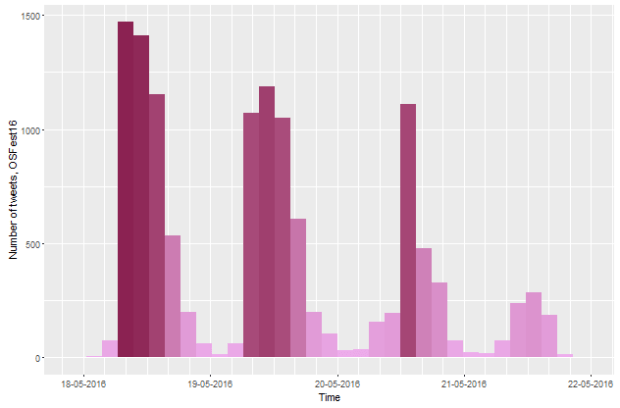

First, when did people tweet? Mostly at the beginning of each day’s programme (9am on the first two days, 2pm on the third day). Tweeting was most intense on the first day and declined over time (Figure 1). The comparatively low participation on the fourth day is due to its different format: an open day in French (rather than an international conference in English), where local people were free to come and go. Online activity is not independent of what happens on the ground. Quite the contrary, it follows the timing of physical activity.
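A minimal sketch of how tweets could be bucketed by day and hour to produce this kind of timeline. It is written in Python with the standard library only, not the R code used for the figures; the timestamps are invented for illustration and the function name `tweets_per_hour` is hypothetical.

```python
from collections import Counter
from datetime import datetime

# Invented timestamps for illustration only (not the Fest dataset).
# Assumption: times are already expressed in local (Paris) time.
timestamps = [
    "2016-01-01T09:05:00",
    "2016-01-01T09:40:00",
    "2016-01-01T14:10:00",
    "2016-01-02T09:15:00",
]

def tweets_per_hour(stamps):
    """Count tweets in each (date, hour) bucket."""
    counts = Counter()
    for stamp in stamps:
        t = datetime.fromisoformat(stamp)
        counts[(t.date().isoformat(), t.hour)] += 1
    return counts

hourly = tweets_per_hour(timestamps)

# The busiest bucket corresponds to a peak in the timeline.
peak_bucket, peak_count = max(hourly.items(), key=lambda kv: kv[1])
```

Plotting `hourly` in chronological order would give a curve comparable in spirit to Figure 1.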

Who tweeted most? Our dataset has a predictable outlier, the official @OuiShareFest Twitter account, which published 727 tweets – twice as many as the second-ranked account. But let’s look at the people who had no obligation to tweet, and still did so: who among them contributed most to documenting the Fest? Figure 2 shows the presence of some other institutional accounts among the top 10, but the most active include a few individual participants. Ironically, one of them was not even physically present at the Fest, and followed the live video stream from home. In this sense, Twitter served as an interface between event participants and interested people who couldn’t make it to Paris.

What was the proportion of tweets, replies and retweets? Original tweets are interesting for their unique content (what are people talking about?), while replies and retweets are interesting because they reveal social interactions – dialogue, endorsement or criticism between users. Figure 3 shows that the number of replies is small compared to tweets and retweets.
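As a rough illustration of this three-way split, the sketch below classifies each record as a tweet, a reply or a retweet. It is Python rather than the R tooling used for the analysis, the records are made up, and the field names `reply_to` and `is_retweet` are assumptions about how the data might be stored.

```python
from collections import Counter

# Made-up records; the field names are hypothetical.
tweets = [
    {"user": "alice", "text": "Great opening talk", "reply_to": None, "is_retweet": False},
    {"user": "bob", "text": "RT @alice: Great opening talk", "reply_to": None, "is_retweet": True},
    {"user": "carol", "text": "@alice agreed!", "reply_to": "alice", "is_retweet": False},
    {"user": "alice", "text": "Slides are online", "reply_to": None, "is_retweet": False},
]

def kind(tweet):
    """Return 'retweet', 'reply' or 'tweet' for one record."""
    if tweet["is_retweet"]:
        return "retweet"
    if tweet["reply_to"] is not None:
        return "reply"
    return "tweet"

counts = Counter(kind(t) for t in tweets)

# Proportion of each kind among all records.
shares = {k: n / len(tweets) for k, n in counts.items()}
```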

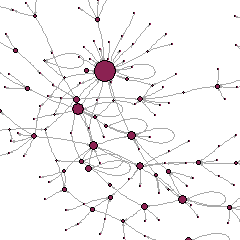

Let’s now take a closer look at the replies. By recording who replied to whom, we can build a social network of conversations among a group of tweeters. It’s a relatively small network of 311 tweeters (the coloured points in Figure 4), with 321 ties among them (the lines in Figure 4). The size of points depends on the number of their incoming ties, that is, the number of replies received: even if the points haven’t been labelled, I am sure you can tell immediately which one represents the official @OuiShareFest account… the usual suspect! But let’s look at the network structure more closely. Some ties are self-loops, that is, people replying to themselves. (Let’s be clear, it’s not a sign of social isolation, but simply a consequence of the 140-character limit imposed by Twitter: self-replies are meant to deliver longer messages.) A lot of other participants are involved in just simple dyads or small chains (A replies to B who replies to C, but then C does not reply to A), unconnected to the rest. There is a larger cluster formed around the most replied-to users: here, some closure becomes apparent (A replies to B who replies to C who replies to A) and enables this sub-network to grow.
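The notions used above (ties, replies received, self-loops, closure) can be sketched on a toy reply list. This is a standard-library Python illustration, not the igraph code behind Figure 4; the user names are invented, the helper `closed_triads` is hypothetical, and counting self-replies among replies received is an assumption of this sketch.

```python
from collections import Counter

# Toy (replier, replied_to) pairs; not the Fest data.
replies = [
    ("alice", "alice"),  # self-reply, e.g. to continue a long message
    ("bob", "alice"),
    ("carol", "bob"),
    ("alice", "carol"),
    ("dave", "erin"),    # isolated dyad
]

ties = set(replies)  # distinct directed ties
self_loops = {(a, b) for a, b in ties if a == b}

# Replies received per user (assumption: self-replies are included).
replies_received = Counter(target for _, target in replies)

def closed_triads(ties):
    """Find sets {A, B, C} such that A replies to B, B to C and C to A."""
    succ = {}
    for a, b in ties:
        if a != b:
            succ.setdefault(a, set()).add(b)
    found = set()
    for a in succ:
        for b in succ[a]:
            for c in succ.get(b, ()):
                if c != a and a in succ.get(c, ()):
                    found.add(frozenset((a, b, c)))
    return found

triads = closed_triads(ties)
```

In this toy example, alice, bob and carol form one closed triad, while dave and erin remain an unconnected dyad.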

Now, my own experience of tweeting at the Fest suggested that tweets were multilingual. Apart from the fourth day, there seemed to be a large number of French-speaking participants. A quick-and-dirty (for now) language detection exercise revealed that roughly 60% of tweets were in English and 25% in French, the rest being split between different languages, especially German, Spanish, and Catalan. So, did people reply to each other based on the language of their tweets? It turns out that quite a few tweeters were involved in conversations in multiple languages. Figure 5 is a variant of Figure 4, colouring nodes and ties differently depending on language. A nice mix: interestingly, the central cluster is not monolingual and is in fact held together by a few multilingual tweeters, even though their points are small.

Let’s turn now to mentions: who are the most mentioned tweeters? Again, I’ll leave @OuiShareFest out of the analysis, as it is hugely ahead of anyone else with 832 mentions received. Below, Figure 6 ranks the most mentioned: mostly companies (partners or sponsors of the event such as MAIF), speakers (such as Nathan Schneider, Nilofer Merchant), and key OuiShare personalities (such as Antonin Léonard). Mentions follow the programme of the event, and the most mentioned are the people and organizations that play a role in shaping it.

Mentions are also a basis to build another social network – of who mentions whom in a tweet. This will be a larger network compared to the network of replies, as mentions can be of many types and also include retweets (which, as we saw above, are very numerous here). There are 17248 mentions (some of which are repeated) in the network. They involve 796 users who mention others and are mentioned in turn; 550 users who are mentioned, but do not mention anyone themselves; and 1680 users who mention others, but are not themselves mentioned.
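The three groups of users can be obtained with simple set operations, as in the Python sketch below. It uses invented pairs rather than the Fest data, and it assumes that "mentioned in turn" means a user appears in both roles somewhere in the data, not necessarily within the same pair.

```python
# Toy (mentioner, mentioned) pairs; repeated pairs are allowed.
mentions = [
    ("alice", "bob"),
    ("bob", "alice"),
    ("carol", "alice"),
    ("alice", "dave"),
    ("carol", "alice"),  # a repeated mention
]

senders = {s for s, _ in mentions}
receivers = {r for _, r in mentions}

both = senders & receivers             # mention others and are mentioned
only_mentioned = receivers - senders   # mentioned, never mention anyone
only_mentioning = senders - receivers  # mention others, never mentioned
```

The three sets are disjoint, and their sizes add up to the total number of users in the mention network.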

A large network such as this is more difficult to visualize meaningfully, and I had to introduce some simplifications to do so. I have included only pairs in which one had mentioned the other at least twice: this makes a network of 778 nodes with 2222 ties. The colour of nodes depends on their modularity class (a group of nodes that are more connected with one another than with other nodes in the network) and their size depends on the number of mentions received. You will clearly recognize, at the center of the network, the official @OuiShareFest account, which structures the bulk of the conversations. But even intuitively, other actors seem central as well, and their role deserves to be examined more thoroughly (in some future, less preliminary analysis).
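The "at least twice" simplification amounts to counting repeated pairs and applying a threshold. Here is a hedged Python sketch on invented data; it treats each pair as directed (who mentions whom), which is one possible reading of the rule, and the constant `MIN_MENTIONS` is a name introduced for illustration.

```python
from collections import Counter

# Toy (mentioner, mentioned) pairs; not the Fest data.
mentions = [
    ("alice", "bob"),
    ("alice", "bob"),
    ("bob", "alice"),
    ("carol", "alice"),
    ("carol", "alice"),
    ("carol", "alice"),
    ("dave", "carol"),
]

MIN_MENTIONS = 2  # keep a tie only if the mention occurred at least twice

pair_counts = Counter(mentions)
kept_ties = {pair: n for pair, n in pair_counts.items() if n >= MIN_MENTIONS}

# Nodes that survive the filter are those attached to a kept tie.
kept_nodes = {user for pair in kept_ties for user in pair}
```

Users connected only through one-off mentions (dave in this example) drop out of the simplified network, which is why the filtered graph has fewer nodes than the full list of mentioning and mentioned users.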

This analysis is part of a larger research project, “Sharing Networks”, led by Antonio A. Casilli and myself, and dedicated to the study of the emergence of communities of values and interest at the OuiShare Fest 2016. Twitter networks will be combined with other data on networking – including informal networking which we are capturing through a (perhaps old-fashioned, but still useful!) survey.

The analyses and visualizations above were done with the R packages twitteR and igraph; Figure 7 was produced with Gephi.